I. The Spiral Evolution of Artificial Intelligence: Lessons from Dartmouth to the Rise and Fall of Expert Systems

Yan Li, Tianyi Zhang

Preface: The Reality and Questions of the Era of Large Models

The explosive debut of ChatGPT in late 2022 ignited a global AI race. Over the past two years, rapid technological advances and heavy capital investment have propelled generative AI to an almost mythic status. However, as the limits of the technology become increasingly apparent, expectations for Artificial General Intelligence (AGI) have begun to return to a more rational level.

Over the course of 2024, OpenAI experienced internal turmoil: Chief Scientist Ilya Sutskever left in May and Chief Technology Officer Mira Murati followed in September, adding further uncertainty to the AGI pathway centered on large language models.

Meanwhile, China’s AI startup DeepSeek has rapidly risen, achieving performance breakthroughs with open-source models, though its advancements remain confined to the realm of engineering optimization.

As the technological hype gradually subsides, people are starting to seriously contemplate an important question: In an era where computing power and data have become fundamental infrastructure, how can we ensure that artificial intelligence truly serves all members of society?

Origins of AI

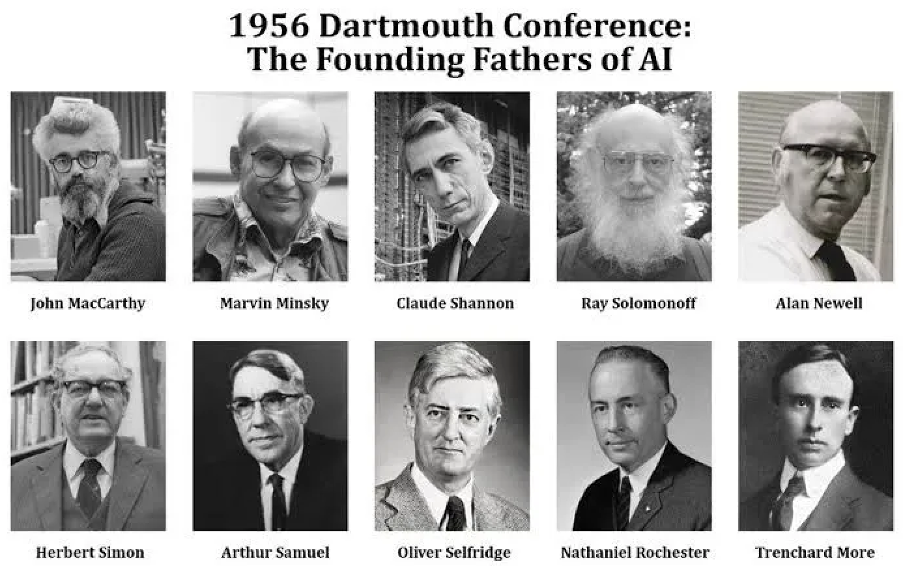

The term Artificial Intelligence (AI) was coined by John McCarthy in the 1955 proposal for the Dartmouth Conference and entered wide use after the conference itself, held in the summer of 1956. At this gathering, McCarthy, along with Marvin Minsky, Claude Shannon, and others, put forth a grand vision: enabling computers to simulate human cognition, reasoning, learning, and problem-solving.

They defined a set of intelligent features that became the core directions for early AI research:

- Symbolic reasoning: Utilizing symbolic systems for knowledge representation and logical inference.

- Natural language processing: Studying how machines comprehend and use human language.

- Machine learning: Exploring the possibility of computers learning from experience.

- Automated reasoning and problem-solving: Enabling machines to solve mathematical problems, logic puzzles, and more.

- Self-improvement: Investigating how machines can learn and optimize themselves during operation.

- Cognitive simulation: Mimicking human thought processes and integrating findings from psychology and cognitive science.

Although these ideas were somewhat immature at the time, they laid the foundation for AI development and outlined two primary research pathways:

- Symbolism: Simulating reasoning based on logical rules.

- Connectionism: Drawing inspiration from biological neural networks to simulate human cognition.

Early AI research developed along these two paths in parallel, a trend that continues to this day.

The 1956 Dartmouth Conference was a milestone in AI history, introducing the concept of artificial intelligence and laying the foundation for research in this field. The image includes pioneering figures such as John McCarthy (the “Father of AI”), Marvin Minsky (a pioneer in machine learning research), and Claude Shannon (the “Father of Information Theory”), whose contributions continue to shape the trajectory of AI development.

Early Development of AI

In the first decade following the Dartmouth Conference, AI research made numerous remarkable advancements. The United States quickly established three major AI research centers, each achieving significant progress in different domains:

- Carnegie Mellon University (CMU): Herbert A. Simon and Allen Newell developed the General Problem Solver (GPS), emphasizing the role of symbolic logic in AI and pioneering the application of computer science in psychology.

- IBM: Arthur Lee Samuel developed a self-learning checkers program, marking an early breakthrough in machine learning and pushing AI toward dynamic learning capabilities.

- Massachusetts Institute of Technology (MIT): John McCarthy and Marvin Minsky advanced research in expert systems and logical reasoning-based symbolic AI, while also developing LISP (LISt Processing), which became the standard programming language for symbolic AI.

During this period, due to limited computational resources, symbolic AI dominated, relying on rule-based encoding and knowledge bases.

Practical systems appeared as well. In 1961, General Motors installed Unimate, an industrial robot built by Unimation, on one of its production lines, pioneering factory automation. Joseph Weizenbaum developed ELIZA (1966), an early chatbot that demonstrated human-computer interaction. Neural network research also took shape. In 1957, Frank Rosenblatt developed the Perceptron, which, despite being limited to linearly separable classification problems, laid the groundwork for modern neural networks.

This era of exploration set the stage for future developments in expert systems and pushed AI toward broader horizons.

The First AI Winter and Trust Crisis

However, AI’s development was not without setbacks. After the initial boom, research encountered technical bottlenecks. Symbolic AI relied on rule-based systems, but as problem complexity increased, the number of possibilities to examine grew exponentially, a phenomenon known as combinatorial explosion. In 1965, mathematician and computer scientist John Alan Robinson proposed the resolution method to simplify logical reasoning, but it could not overcome the high computational cost of large-scale inference tasks.
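To make the scale of combinatorial explosion concrete, here is a minimal sketch that counts the nodes in a uniform search tree. The function name `search_tree_size` and the uniform branching factor are illustrative assumptions; real inference problems branch unevenly, but the growth pattern is the same.

```python
def search_tree_size(branching_factor: int, depth: int) -> int:
    """Count every node in a search tree where each node has
    `branching_factor` children, from the root (level 0) down to `depth`.

    Hypothetical helper for illustration only.
    """
    return sum(branching_factor ** level for level in range(depth + 1))


if __name__ == "__main__":
    # Each extra level multiplies the work by roughly the branching factor.
    for depth in (2, 4, 8, 16):
        print(depth, search_tree_size(10, depth))
```

With ten choices per step, doubling the depth of reasoning squares the approximate amount of work, which is why faster hardware alone could not rescue exhaustive symbolic search.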

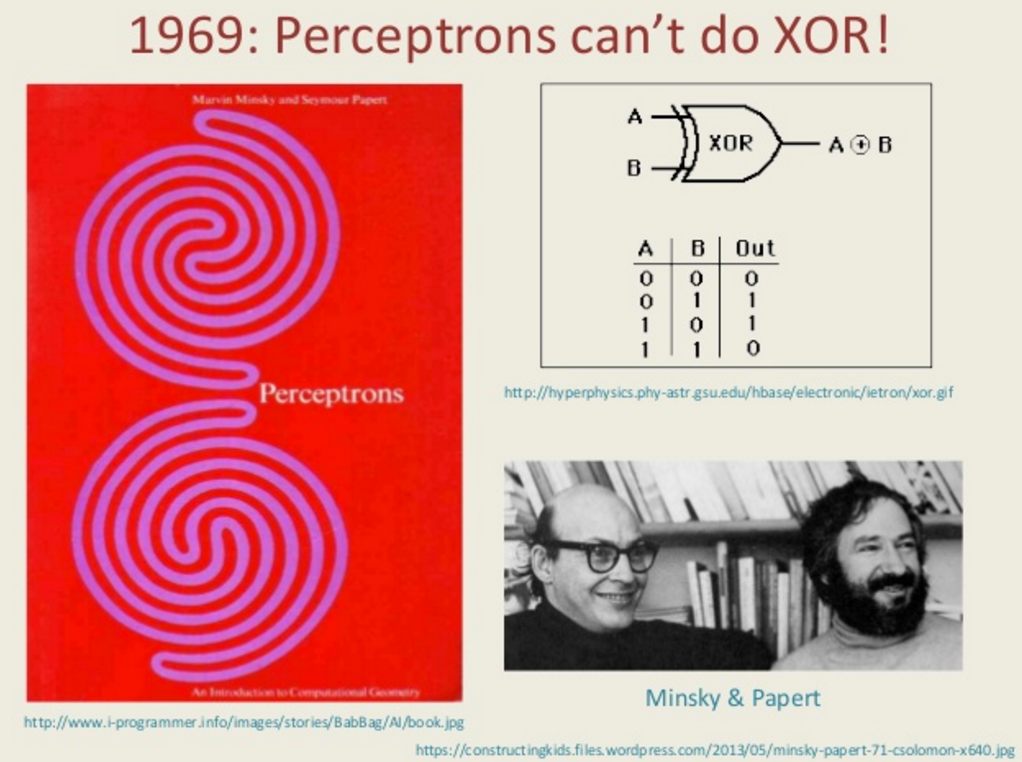

Neural networks also faced a major setback. In 1969, Marvin Minsky and Seymour Papert published Perceptrons, pointing out that single-layer perceptrons cannot learn classification tasks that are not linearly separable, such as the XOR problem. This critique led to widespread skepticism about neural networks, drastically reducing research funding and stalling progress in the field.
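The XOR limitation can be reproduced in a few lines. The following is a minimal sketch of a single-layer perceptron trained with the classic error-correction rule; it is not Rosenblatt's original implementation, and the function names, learning rate, and epoch limit are assumptions chosen for illustration.

```python
def train_perceptron(samples, epochs=50, lr=1.0):
    """Train a two-input perceptron with a step activation.

    `samples` is a list of ((x1, x2), target) pairs with targets 0 or 1.
    Returns the learned weights and bias.
    """
    w = [0.0, 0.0]
    b = 0.0
    for _ in range(epochs):
        errors = 0
        for (x1, x2), target in samples:
            pred = 1 if w[0] * x1 + w[1] * x2 + b > 0 else 0
            delta = target - pred
            if delta != 0:
                # Nudge the decision line toward the misclassified point.
                w[0] += lr * delta * x1
                w[1] += lr * delta * x2
                b += lr * delta
                errors += 1
        if errors == 0:  # every sample classified correctly
            break
    return w, b


def accuracy(samples, w, b):
    """Fraction of samples the perceptron classifies correctly."""
    hits = sum(
        (1 if w[0] * x1 + w[1] * x2 + b > 0 else 0) == target
        for (x1, x2), target in samples
    )
    return hits / len(samples)


AND_DATA = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
XOR_DATA = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

if __name__ == "__main__":
    for name, data in (("AND", AND_DATA), ("XOR", XOR_DATA)):
        w, b = train_perceptron(data)
        print(name, accuracy(data, w, b))
```

AND is linearly separable, so training converges to perfect accuracy. No single straight line separates the XOR classes, so at least one of the four points is always misclassified, however long training runs.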

Compounding these difficulties, machine translation failures further eroded public trust in AI. Researchers initially believed bilingual dictionaries and basic grammar rules would be sufficient for high-quality translation, but the results were riddled with errors. In a widely repeated (and possibly apocryphal) example, “The spirit is willing but the flesh is weak” came back from a round trip through Russian as “The vodka is good but the meat is spoiled.” The U.S. government invested over $20 million in machine translation research, but after the critical ALPAC report of 1966, funding was cut and projects were abandoned.

These technological setbacks triggered policy repercussions. In 1973, Cambridge mathematician James Lighthill published a damning report criticizing AI’s limitations, leading the British and U.S. governments to withdraw research funding. IBM halted all AI research, and the Defense Advanced Research Projects Agency (DARPA) canceled funding for speech recognition research at CMU. Furthermore, the Mansfield Amendment, passed by the U.S. Congress, restricted funding for basic research that lacked clear military applications, exacerbating AI’s financial struggles.

With funding cuts and technological stagnation, AI research entered a deep freeze, known as the First AI Winter, which lasted until the resurgence of AI in the 1980s.

The Second Boom and Winter

The development of any field can be seen as a superposition of multiple waves. While research on general reasoning AI fell into a downturn, AI focused on mimicking human expert decision-making in specific domains—known as expert systems—began to rise. Expert systems stemmed from symbolic AI, simulating human experts’ knowledge and reasoning abilities to assist computers in making decisions within specialized fields.

As early as the late 1950s and 1960s, some AI researchers proposed the core idea that “knowledge is the key to intelligence,” arguing that intelligent behavior requires extensive domain knowledge rather than just reasoning ability. As general reasoning AI encountered bottlenecks, researchers began to realize that knowledge was more critical than reasoning mechanisms and emphasized that the key to intelligent systems lay in how knowledge bases were constructed and utilized.

DENDRAL and MYCIN: The Rise of Expert Systems

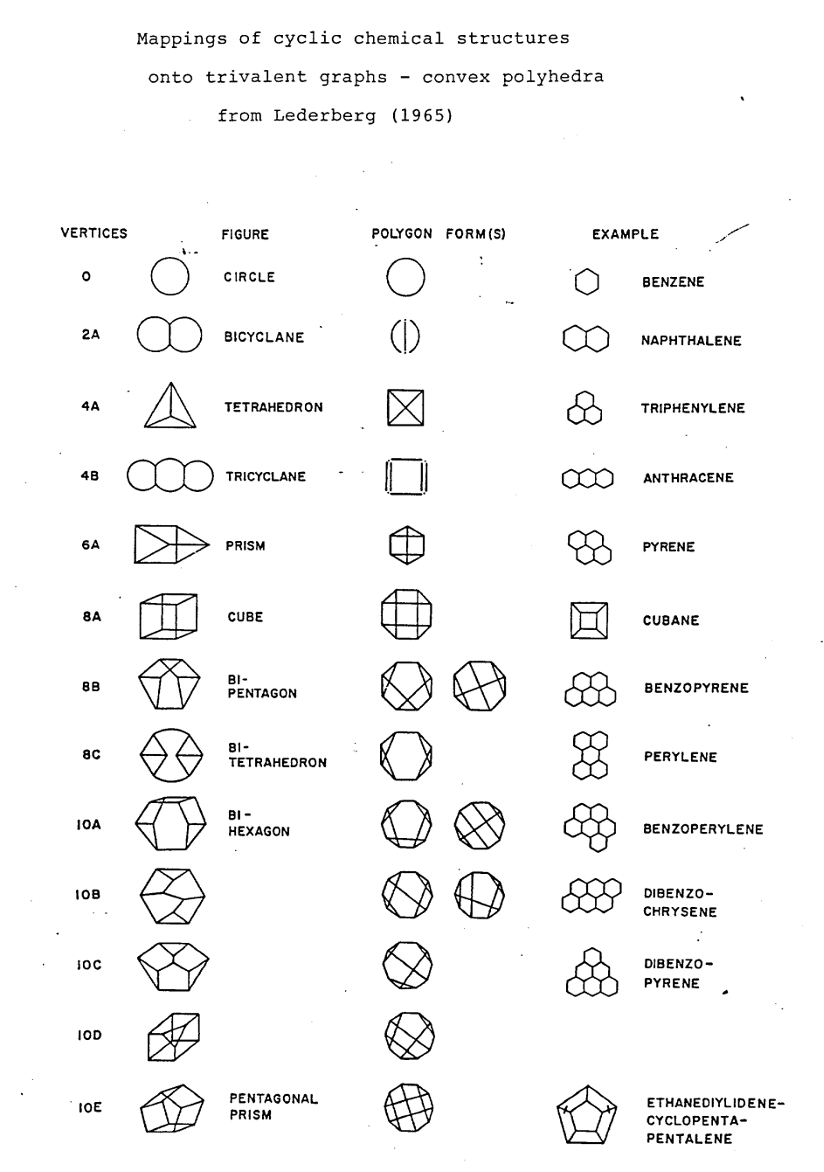

In 1965, the DENDRAL system was developed at Stanford University by Edward Feigenbaum and Joshua Lederberg, designed specifically for chemical analysis to help decipher organic molecular structures. Its success demonstrated that computers could do more than just compute—they could also perform expert-level analysis.

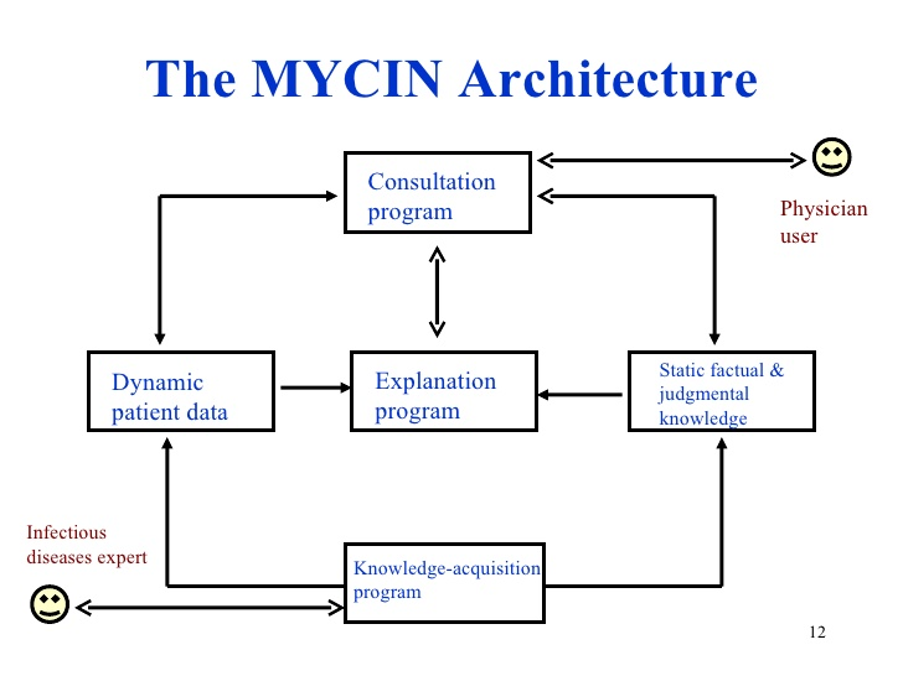

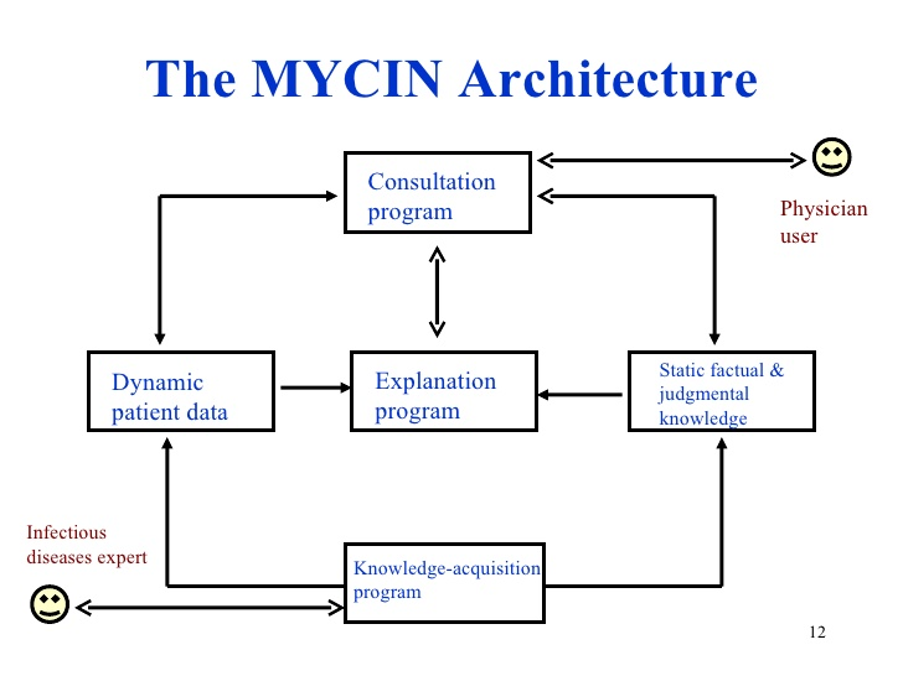

Following this, in the early 1970s, the Stanford group built MYCIN, a system for diagnosing blood infections and recommending antibiotic treatments, developed principally by Edward Shortliffe. MYCIN combined rule-based reasoning with reasoning under uncertainty, enhancing AI’s ability to handle complex tasks.
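MYCIN's uncertainty reasoning is usually described in terms of certainty factors: numbers between -1 (definitely false) and 1 (definitely true) attached to each conclusion. The sketch below shows the commonly cited rule for combining two certainty factors that bear on the same hypothesis. It is an illustration, not MYCIN's actual code, and the function names and sample values are hypothetical.

```python
from functools import reduce


def combine_cf(cf1: float, cf2: float) -> float:
    """Combine two certainty factors in [-1, 1] for the same hypothesis."""
    if cf1 >= 0 and cf2 >= 0:
        # Two supporting pieces of evidence reinforce each other.
        return cf1 + cf2 * (1 - cf1)
    if cf1 < 0 and cf2 < 0:
        # Two opposing pieces of evidence reinforce the disbelief.
        return cf1 + cf2 * (1 + cf1)
    # Conflicting evidence partially cancels out.
    denominator = 1 - min(abs(cf1), abs(cf2))
    if denominator == 0:
        raise ValueError("cannot combine total belief with total disbelief")
    return (cf1 + cf2) / denominator


def combine_all(cfs):
    """Fold a sequence of certainty factors into a single value."""
    return reduce(combine_cf, cfs)


if __name__ == "__main__":
    # Hypothetical example: three rules support the same diagnosis.
    print(combine_all([0.6, 0.4, 0.3]))
```

Each new piece of supporting evidence closes part of the remaining gap to certainty without ever exceeding 1, which let the system accumulate weak clues from many rules into a usable level of confidence.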

The image above maps ring structures in chemical molecules to trivalent graphs and convex polyhedra. The chart classifies structures by vertex count, illustrating the topology of simple benzene rings to more complex polycyclic aromatic compounds and demonstrating how they can be modeled using graph theory.

The image illustrates how MYCIN integrates dynamic patient data, static knowledge, and human-computer interaction, ultimately forming a closed-loop early expert system.

The success of DENDRAL and MYCIN showed that expert systems could surpass general reasoning systems in specific domains. This concept inspired further applications.

For instance, the PROSPECTOR system, developed by SRI International, was successfully used in geological exploration. At CMU, the XCON expert system, developed for Digital Equipment Corporation (DEC), automated computer system configuration, reducing errors that led to returns, repairs, and manual inspections, saving $40 million annually. Between 1980 and 1985, global AI investments exceeded $1 billion, making expert systems a major breakthrough in AI commercialization.

The device in this image is the Osborne 1, widely regarded as the world’s first commercially successful portable personal computer. Introduced by Osborne Computer Corporation in 1981, it marked the beginning of personal computing entering the mainstream market. The development of expert systems required specialized languages, tools, and hardware (such as CLISP and LISP machines), while personal computers like the Osborne 1 accelerated the adoption of operating systems (CP/M, MS-DOS) and development tools.

Limitations of Expert Systems and the Second AI Winter

However, as expert systems became more widely applied, their limitations became apparent. The knowledge acquisition bottleneck restricted system expansion: expert knowledge had to be manually extracted and encoded by knowledge engineers, a process that was both cumbersome to carry out and difficult to maintain.

Another challenge was the rule base expansion problem. As the number of rules increased, computational costs skyrocketed, reducing system efficiency. Additionally, expert systems heavily relied on manually defined rules and lacked adaptive learning capabilities, making them unsuitable for dynamic environments. The limitations of computer hardware also made expert systems difficult to scale.

Japan’s Fifth Generation Computer Project attempted to overcome symbolic AI bottlenecks, but its goals were overly ambitious. The project ultimately failed to meet expectations, becoming a major setback for the AI field. Governments and businesses scaled back investments, many expert system projects were shelved, and AI research fell into another downturn.

During this period, artificial intelligence faced two core challenges. The first was the interaction problem—traditional AI methods primarily simulated human deliberative decision-making, but struggled to achieve dynamic interaction with the environment, limiting their real-world applications. The second was the scalability problem—early AI approaches were well-suited for building domain-specific expert systems, but lacked generality, making them difficult to scale to larger, cross-domain complex systems.

These issues led to the failure of multiple AI research initiatives, marking another major setback in AI development. The field once again entered an “AI Winter,” its second.

Learning from History: The Spiral Evolution of AI

At this point, the first phase of AI development had come to a close. From the theoretical explorations at the 1956 Dartmouth Conference to the rise of symbolic AI and expert systems, AI went through two cycles of boom and bust. Each technological breakthrough was driven by market and societal needs, and each AI winter forced researchers to rethink the direction of AI’s progress.

Today, many AI research paths still reflect the past. For example, agents can be seen as more advanced, interactive expert systems. Likewise, today’s multimodal large models, which hold the greatest promise for achieving Artificial General Intelligence (AGI), relate to agents much like general logical AI once related to expert systems—one pursues generalization, while the other emphasizes specialization. Additionally, the emerging field of neuro-symbolic AI seeks to combine the pattern recognition strengths of neural networks with the interpretability of symbolic logic, addressing their respective limitations to build more robust and explainable AI systems.

Looking at history, every leap in AI has relied not only on theoretical breakthroughs but also on market and societal demands. History has proven that AI’s value does not lie in theoretical completeness but in its ability to solve real-world problems. For this reason, when expert systems hit the knowledge acquisition bottleneck, the market swiftly shifted focus to data-driven machine learning.

In the next section, we will return to 1986, when the backpropagation algorithm marked a turning point in AI. We will explore how AI overcame the limitations of expert systems and, through a revolution driven by data and computational power, evolved from machine learning into today’s globally influential era of deep learning.
