II. The Evolution of Artificial Intelligence: A Paradigm Shift Driven by Computing and Data

Yan Li, Tianyi Zhang

The Evolution of Artificial Intelligence: A Paradigm Shift Driven by Computation and Data

In the late 1980s, artificial intelligence (AI) research underwent a significant paradigm shift, transitioning from rule-based systems to data-driven approaches. Early expert systems relied on meticulously constructed knowledge bases and inference rules, holding great promise. However, as their application scale expanded, challenges such as knowledge acquisition bottlenecks, rule explosion, poor adaptability, and computational resource limitations became increasingly evident, making it difficult for expert systems to handle complex and dynamic real-world environments.

At the same time, data-driven machine learning began to emerge. In 1986, the introduction of the backpropagation algorithm enabled multi-layer perceptrons (MLPs) to learn complex data patterns, reigniting academic interest in neural networks. With advancements in both data availability and computational power, AI research moved away from expert systems dependent on manually designed rules and shifted toward machine learning, with statistical learning and neural networks at its core. From this point onward, data and computation became the driving forces behind AI’s progress.

Neural Networks and Statistical Learning: Two Paths to Computational Intelligence

In the 1960s, neural networks were initially seen as the future of AI. However, in 1969, Marvin Minsky and Seymour Papert pointed out in their book Perceptrons that single-layer perceptrons were incapable of solving non-linear classification problems such as XOR. At the same time, multi-layer perceptrons lacked effective training methods, causing neural network research to stagnate for the next decade. In 1970, Paulo Werbos introduced the idea of using the chain rule to compute gradients in his doctoral dissertation, laying the foundation for backpropagation, though his work initially went unnoticed due to experimental limitations.

Throughout the 1970s and 1980s, continued advancements in computing hardware laid the groundwork for the revival of neural networks. In 1976, Intel released the 8086 processor; the same year, the Cray-1 supercomputer adopted vector computing to enhance data processing capabilities. In 1980, IBM introduced the first 1GB hard drive, significantly reducing data storage costs. In 1979, Oracle launched the relational database management system (RDBMS), revolutionizing data management. In 1983, ARPANET adopted the TCP/IP protocol, promoting data sharing. By 1986, MIT developed the Connection Machine (CM-1), designed specifically for neural network computation. Over this decade, computing power increased 100-fold, and storage capacity grew 40-fold, drastically improving data processing capabilities.

The image above shows the Connection Machine CM-1/CM-2 supercomputers, developed by Thinking Machines Corporation in the 1980s, designed for AI, simulation, and data analysis.

Against this backdrop, Geoffrey Hinton and his collaborators published their groundbreaking work on backpropagation in Nature in 1986, providing a practical training method for multi-layer perceptrons. In the same year, Hinton also introduced recurrent neural networks (RNNs) for processing sequential data, while Yann LeCun developed convolutional neural networks (CNNs) in 1989, pioneering computer vision research with the LeNet-5 architecture. With these advancements, CNNs and RNNs became the foundational architectures of neural networks.

Despite these breakthroughs, limited computational power prevented large-scale applications of neural networks. During this period, statistical learning began to take center stage, transitioning machine learning from theoretical exploration to practical applications. From the 1980s to the 1990s, decision trees played a crucial role in financial risk assessment and customer segmentation, support vector machines (SVMs) revolutionized gene expression classification in biomedical research, and hidden Markov models (HMMs) powered IBM and AT&T’s speech recognition systems. Bayesian networks transformed decision-making in medical diagnostics and fraud detection, while Markov random fields (MRFs) advanced image segmentation and pattern recognition in medical imaging and industrial quality control.

These statistical learning methods did not disappear with the rise of deep learning but instead continued to shape fields such as generative AI, reinforcement learning, and causal inference. The transition from neural network stagnation to the rise of statistical learning represented a pivotal shift in AI research, setting the stage for the emergence of deep learning in the 21st century.

Statistical Learning Took the Lead: Machine Learning Moved Toward Applications

From 1996 to 2009, AI progressed from theoretical research to practical engineering and commercialization, driven by advancements in computing power and the explosion of data availability. At the same time, statistical learning replaced symbolic AI as the dominant approach, bringing machine learning out of research labs and into real-world applications.

In terms of computational power, Intel’s 80486 processor, introduced in 1989, integrated a floating-point unit (FPU) for enhanced efficiency. In 2005, Intel and AMD introduced dual-core processors, marking the beginning of the multi-core computing era. In 1999, NVIDIA released the first GeForce GPU, which AI researchers later discovered could significantly accelerate model training through parallel computing. In 2006, NVIDIA launched CUDA, enabling GPUs to be used for general-purpose computing. By 2008, NVIDIA introduced the Tesla GPU series, specifically designed for high-performance AI computation, further advancing AI research.

The image above shows IBM’s Deep Blue, the computer that defeated world chess champion Garry Kasparov.

Data storage and management also saw significant progress. In the early 2000s, hard drive capacities expanded from 20GB to 1TB, and by 2005, the rise of flash memory technology and solid-state drives (SSDs) significantly improved data retrieval speeds. In 2007, Amazon launched AWS S3, shifting data storage toward cloud computing. Distributed storage systems and real-time data stream management technologies emerged, enabling the efficient handling of massive datasets needed for machine learning.

The proliferation of the internet further accelerated data availability. In 1991, Tim Berners-Lee invented the World Wide Web (WWW), which was commercialized in 1995, leading to rapid data growth. By the mid-2000s, Web 2.0 transformed the internet, shifting users from passive consumers to active content creators. The launch of YouTube in 2005 introduced video as a dominant content format, providing AI with rich datasets for image and video analysis. By 2008, Facebook’s data storage exceeded 2 petabytes (PB), solidifying data as the most critical fuel for AI research.

The image above shows the printed circuit board of a SanDisk Cruzer Titanium USB flash drive, featuring a Samsung flash memory chip (left) and a Silicon Motion controller chip (right).

During this period, machine learning algorithms matured and became widely adopted in various industries. In 2001, Leo Breiman introduced Random Forest, improving decision tree generalization, with applications in finance, healthcare, and industry. In 2003, LASSO regression was introduced, enhancing model interpretability. Meanwhile, deep learning began to emerge, as Geoffrey Hinton proposed Deep Belief Networks (DBNs) in 2006, although they remained experimental at the time.

Reflecting on 1996-2009, AI’s trajectory was clear: advancements in computational power and data growth propelled AI into a new stage. From CPUs to GPUs, HDDs to SSDs, Web 1.0 to Web 2.0, each technological breakthrough reshaped AI research and directly contributed to the rise of deep learning.

GPU Computing and the Internet Drove AI Transformation: The Revival of Deep Learning

After 2009, deep learning gradually replaced traditional statistical machine learning, driven by breakthroughs in GPU computing and the explosion of data. It became the core of AI research and achieved significant advancements in fields such as computer vision, natural language processing (NLP), speech recognition, autonomous driving, and recommendation systems. This period marked a critical transformation of AI from academic research to industrial applications.

The turning point came in 2009 when Google began using GPUs to train neural networks, ushering in a new era of AI research. In 2012, AlexNet utilized GPU-accelerated training for the first time in the ImageNet competition, dramatically improving image recognition accuracy and demonstrating the potential of GPUs for neural network computation. Soon after, NVIDIA introduced high-performance GPUs that significantly accelerated deep learning training. In the same year, AWS and Google Cloud expanded cloud AI computing, removing local hardware constraints for researchers.

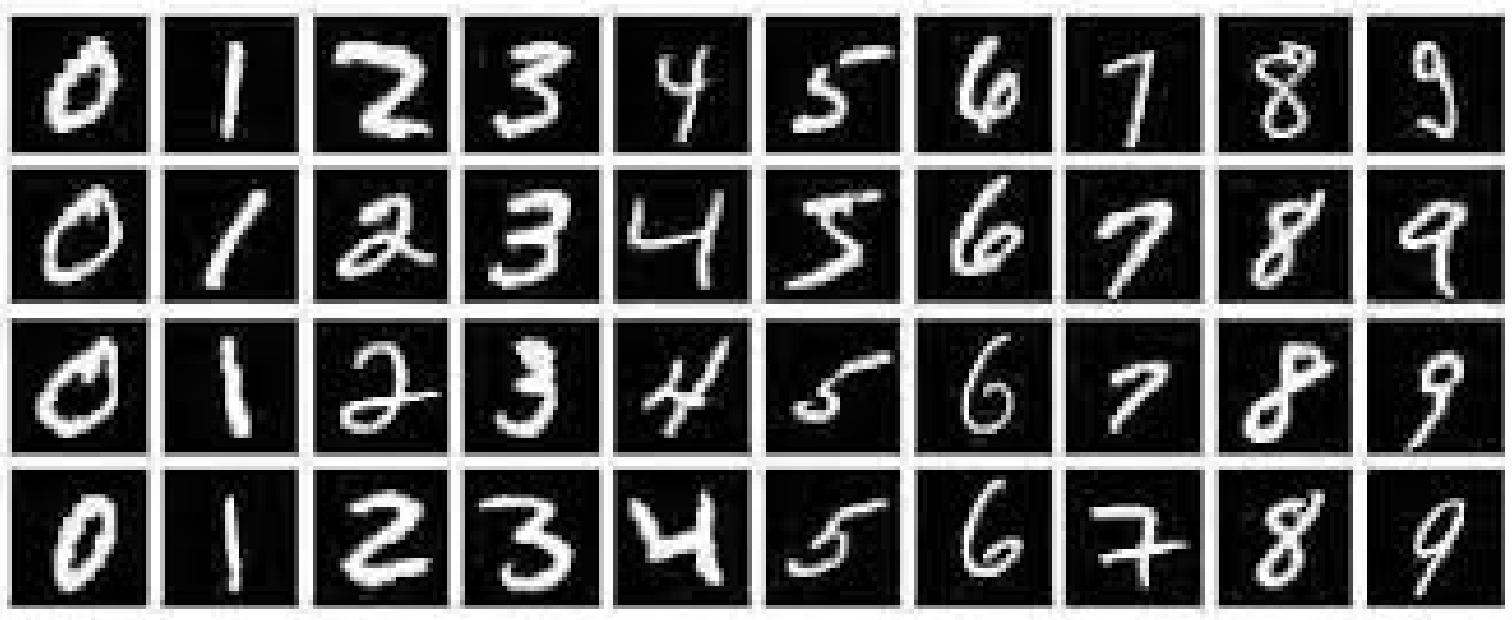

On the data front, the ImageNet dataset (containing 14 million images) was open-sourced in 2010, followed by Microsoft’s COCO dataset (330,000 images) for object detection in 2014. In NLP, Google released the One Billion Word corpus, and Wikipedia and Common Crawl became essential data sources. Meanwhile, social media platforms such as Twitter, YouTube, and Instagram generated massive datasets that researchers leveraged for AI model training. As data volumes surged, crowdsourcing data labeling platforms like Amazon Mechanical Turk emerged, solving bottlenecks in dataset annotation.

The image above shows the ImageNet dataset used for AI training.

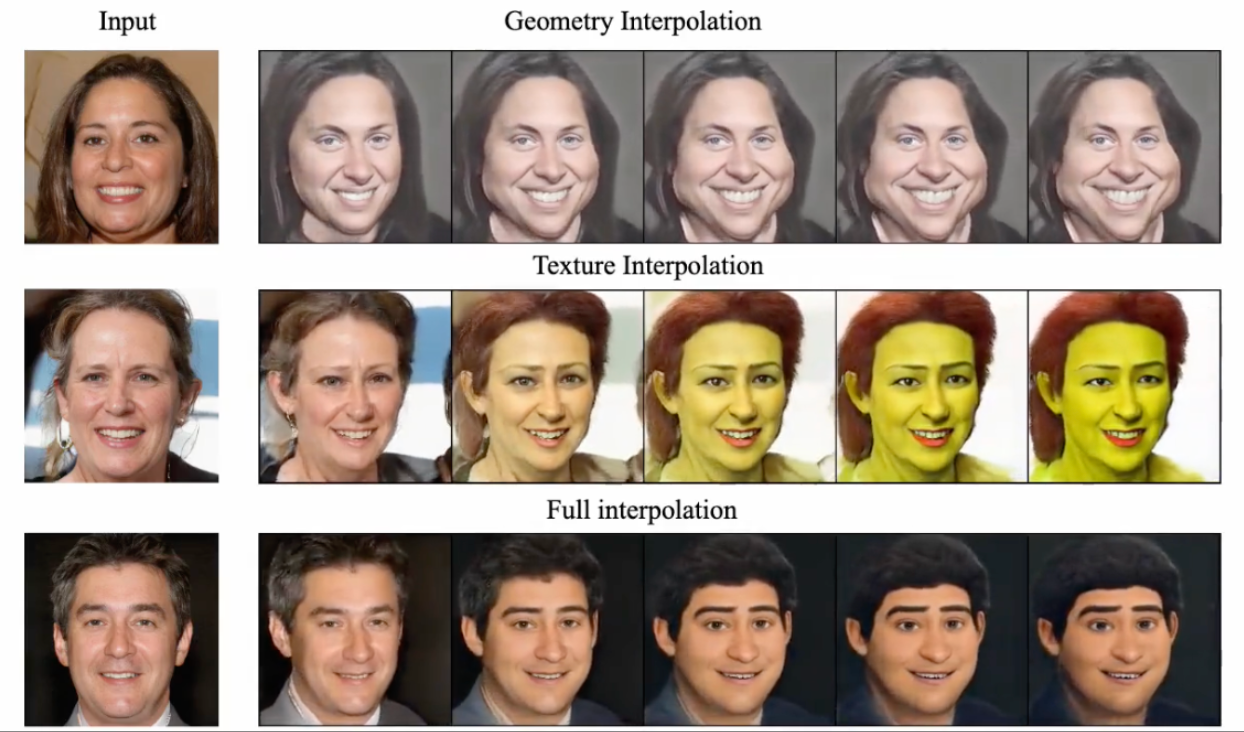

Thanks to advancements in GPUs and the explosion of available data, deep learning saw a series of groundbreaking innovations during this period. Hinton’s Dropout method helped mitigate overfitting, while Nair & Hinton’s ReLU activation function improved model training stability. In 2014, Ian Goodfellow proposed Generative Adversarial Networks (GANs), opening up new possibilities for AI-driven image generation and style transfer. In 2013, Google introduced Word2Vec, significantly enhancing language understanding in NLP tasks. In 2015, Microsoft’s ResNet addressed the issue of neural network degradation, reducing ImageNet classification error rates to 3.6%, surpassing human-level performance for the first time.

The image above illustrates an example of AI-driven style transfer.

These advancements in deep learning attracted global technology giants. In 2014, Google acquired DeepMind for $400 million and invested $1.2 billion in AI computing infrastructure. In 2015, AWS allocated over $2 billion to expand its AI cloud computing capabilities. Global AI investment skyrocketed from $30 billion in 2011 to $68 billion in 2015. Of this, corporate AI R&D investments exceeded $50 billion, while venture capital funding for AI startups reached $18 billion, fueling an unprecedented growth phase for the AI industry.

The rapid advancement in computing power, data availability, algorithm optimization, and corporate investment propelled AI into a high-speed development phase after 2015. This period set the stage for the emergence of Transformer models and large-scale language models, heralding the next revolution in AI.

The image above shows the Alexa voice assistant.

Data and Computation: The Foundation of AI’s New Intelligent Era

From 1986 to 2015, AI’s progress was consistently driven by the dual forces of data and computation. If market and societal demand served as external drivers shaping AI’s direction, then data and computation formed the internal foundation, defining AI’s capability limits. Each paradigm shift in AI—from the resurgence of neural networks to the dominance of statistical learning, and then the full-scale rise of deep learning—was accompanied by exponential growth in both computing power and data scale.

However, computing power, data volume, and market demand alone were not enough to propel AI to its current level of global adoption and industry penetration. The rapid transformation of AI from academic research to real-world industrialization and its role in a sweeping technological revolution were largely fueled by the rise of open-source culture. As computing capabilities, data resources, and algorithmic innovations converged, AI development moved beyond the domain of a few tech giants and entered an era where global developers, research institutions, enterprises, and communities collectively shaped the field. The emergence of open-source frameworks such as TensorFlow, PyTorch, and Hugging Face significantly lowered the barrier to AI research and applications, accelerating its widespread adoption and integration into everyday life.

The evolution of AI is not just about computation or data—it has ushered in an era of open collaboration and co-creation. In the next section, we will explore how open-source ecosystems have shaped AI’s development, examining how open-source frameworks, datasets, and community collaboration have accelerated AI’s technological iteration and facilitated the transition from laboratory research to real-world applications.

Join the AIHH Community!

Welcome to the AIHH (AI Helps Humans) Community! Join us in exploring the limitless possibilities of AI empowering everyday life!

👇 Scan the QR code below to join the AIHH community and stay updated on AI applications. 👇