III. The Open-Source Ecosystem: The Ultimate Force Reshaping AI Development

Yan Li, Tianyi Zhang

Over the past decade, artificial intelligence (AI) has experienced remarkable growth, fundamentally transforming various industries. In the previous two articles, we explored (1) how market and societal demands serve as external drivers accelerating AI’s rapid evolution, and (2) how data and computing power act as internal foundations that determine AI’s ultimate potential. However, beyond these two core factors, AI’s rapid advancement has also been fueled by a critical catalyst—open source. Open source has not only accelerated AI innovation and adoption but has also lowered technical barriers, enabling researchers, enterprises, and developers worldwide to efficiently share knowledge, improve algorithms, and apply AI across diverse scenarios. This article reviews the development of AI from 2015 to the present, illustrating how open source has become the ultimate force reshaping the AI landscape.

What is Open Source? From the Free Software Movement to the Modern Software Ecosystem

Open source refers to software or technology whose source code is publicly available and may be used, studied, modified, and redistributed under the terms of its license. It grew out of the early culture of sharing code among computer users and then evolved through the Free Software Movement and enterprise-driven open-source ecosystems. Today it is deeply integrated with AI. Throughout this history, software copyright has been a constant point of contention, which led to a long-standing debate between Copyleft and Copyright.

The Copyright vs. Copyleft dispute began in the 1980s. In the United States, the Computer Software Copyright Act of 1980 made it explicit that computer programs are protected by copyright, which granted software developers exclusive rights to their code. As software commercialization grew, companies began restricting access to source code. This sparked the Free Software Movement and gave rise to the GNU (GNU’s Not Unix) Project and the Free Software Foundation (FSF), which advocated for software freedom.

The image above shows a Computer Software Copyright Registration Certificate issued by the National Copyright Administration of China, certifying the software’s copyright ownership.

In 1989, Richard Stallman introduced the GNU General Public License (GPL), which formally codified the concept of Copyleft: anyone who distributes a derivative work must release it under the same license, with its source code available. The GPL later became the foundation of critical projects such as Linux, but its strict requirements initially deterred some enterprises from adopting it.

The image above shows Richard Stallman delivering a speech at MIT during the release of the first draft of GNU GPLv3.

The term “open source” was coined in 1998 in response to enterprise needs. The late 1990s also saw more flexible licensing models and the widespread adoption of technologies such as Apache, MySQL, and PHP. Linux, in particular, became crucial infrastructure for the tech industry. GitHub, founded in 2008, accelerated the growth of the open-source ecosystem by lowering the barriers to collaboration and connecting enterprises with developer communities. As a result, open source gradually became an industry standard.

Today, the balance between open-source ecosystems and commercialization has become a defining feature of technological evolution. The GPL's strict terms have contributed to its declining use in new software projects. Meanwhile, permissive licenses such as MIT and Apache have gained widespread adoption because they are compatible with commercial applications. In the AI era, open source has expanded beyond traditional operating systems and databases. It now includes model architectures, pre-trained models, developer toolchains, and training datasets, and it forms the backbone of AI technology. By 2015, open source was already a driving force behind AI advancements. With the rise of deep learning, it became the key enabler of AI’s explosive growth.

From Open-Source Frameworks to Model Sharing: The Driving Force Behind AI’s Boom

Since 2015, open source has been central to AI’s rapid development. Without open-source deep learning frameworks, shared models, and community collaboration, AI would not have progressed at such an extraordinary pace. AI research has long benefited from academia’s culture of knowledge sharing. However, large-scale open-sourcing of code only began in earnest in the 2000s and early 2010s, with tools such as Scikit-learn (2007), Theano (2010), and Caffe (2013) lowering the barrier to machine learning. Even so, deep learning remained largely the domain of specialists.

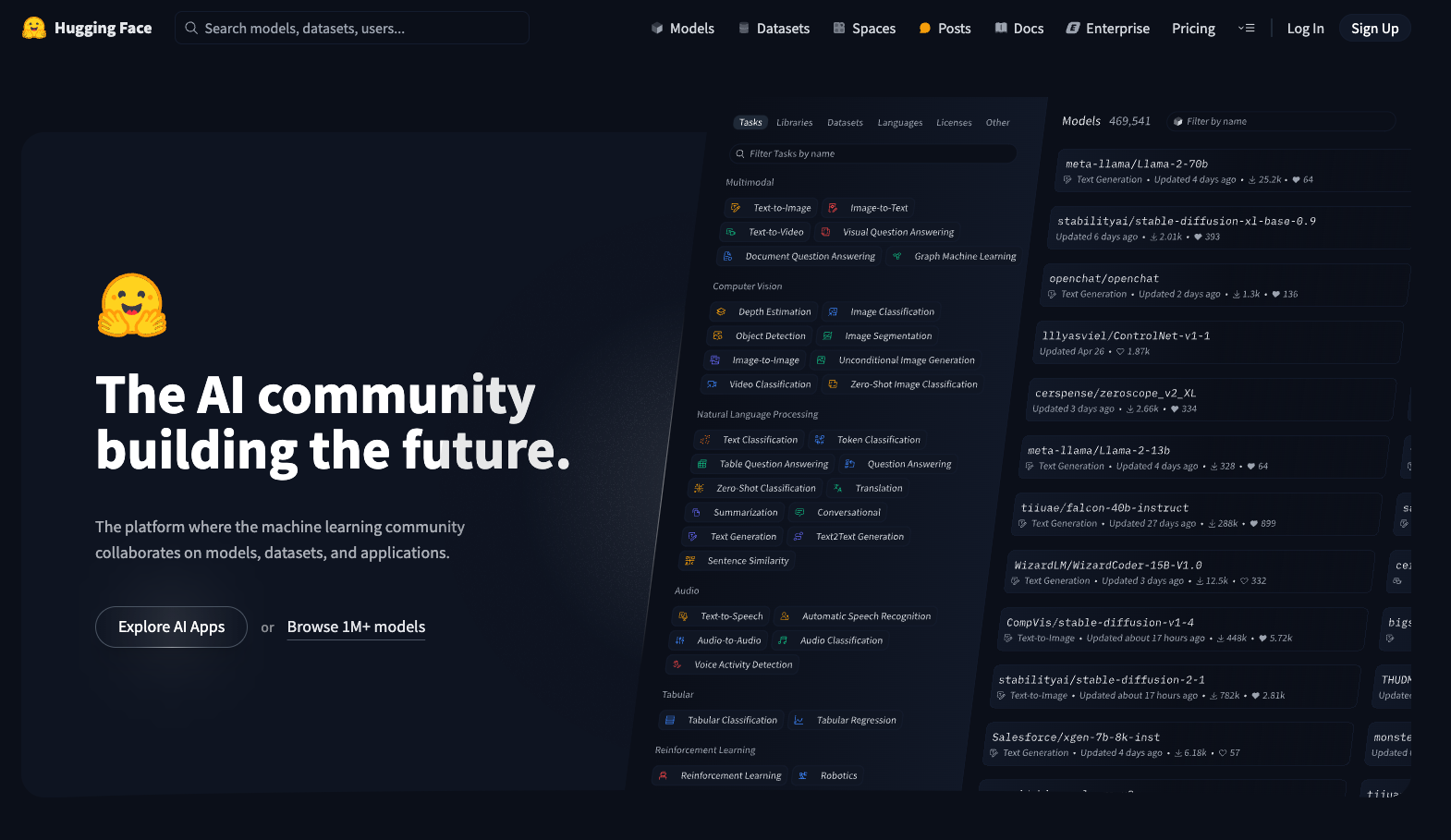

In 2015, Google released TensorFlow, which made enterprise-grade deep learning tools available to the public and transformed AI development. In 2016, Facebook (now Meta) launched PyTorch. Academia adopted it quickly because of its dynamic computation graphs and intuitive API, and it eventually overtook TensorFlow as the most widely used framework in AI research. In 2018, Hugging Face turned to open-source NLP. It promoted open model sharing and helped drive the widespread adoption of pre-trained models such as BERT (2018) and GPT-2 (2019), which accelerated the development of large language models (LLMs). When OpenAI released GPT-2 in 2019, it initially withheld the full model for “safety” reasons. It later made the model available to the community, which lowered barriers to AI research and drove further innovation.

From 2020 onward, open source became even more crucial in the era of large-scale AI models. OpenAI launched GPT-3 in 2020 without releasing its model weights, which raised concerns that AI was becoming increasingly closed. Meta then released LLaMA in 2023, and Mistral launched Mistral 7B the same year, reinforcing the open-source AI movement. Meanwhile, Stable Diffusion (2022) and other open-source generative models democratized applications such as image generation and automated editing. These projects have empowered developers worldwide to innovate independently rather than being restricted to a handful of corporate-controlled AI models. This spirit of open collaboration has ushered AI into an era of unprecedented prosperity, with new models and methods emerging at an accelerating pace.

The image shows an AI-generated NFT (Non-Fungible Token) artwork, created in the style of the Bored Ape Yacht Club (BAYC) series. It has attracted significant attention in the digital art and blockchain communities.

Open source not only makes AI research more open and efficient but also fosters global collaboration. With the Hugging Face Transformers library, researchers worldwide can use state-of-the-art models published by others in just a few lines of code. Platforms such as GitHub and arXiv let papers and code be shared instantly, which speeds the spread of AI advances. Organizations such as the volunteer research collective EleutherAI and the startup Stability AI have trained and openly released large models, helping to reduce the risks of centralization in the AI field. This open collaboration model has shifted AI research from the labs of a few companies to a global network of developer-driven innovation.

However, some AI giants have benefited from open source and then gradually moved toward closed-source strategies in an attempt to monopolize advanced technologies. OpenAI is the most notable example. It was founded in 2015 as a nonprofit with a mission to democratize AI and initially released a series of open-source tools. After Microsoft invested in the company in 2019, however, its strategy changed. In 2020, GPT-3 was made available only through an API. In 2023, GPT-4 was released without any disclosure of its architecture, model size, or training details, and it was tightly integrated into Microsoft’s Azure ecosystem. This marked OpenAI’s transformation from a research institute advocating open technology into a commercially driven, closed company.

The battle between open source and closed source will continue, but the trend is becoming clear: true progress in AI will come not from the closed-door decisions of a few tech giants but from the collective efforts of a global open-source community. Companies such as Meta, Mistral, and Hugging Face remain strong advocates for open source and are driving the decentralization of AI. For them, open source is not just a technical choice but a belief that AI development should be fairer, more transparent, and more sustainable. At the same time, new open-source forces such as DeepSeek are emerging rapidly. History will show that closed systems only slow down innovation. The future belongs to open-source AI and to those who share technology and embrace the community.

The image shows the homepage of the Hugging Face platform. As a core community for AI, Hugging Face supports collaboration among machine learning developers on models, datasets, and applications.

Breaking the AI Monopoly: DeepSeek’s Open-Source Leap Through Distributed Innovation

OpenAI kept GPT-4 (2023) and its o1 reasoning models (2024) fully closed, and since then the global AI community has been caught in a wave of collective anxiety over technological monopolies. The Chinese team DeepSeek has taken a fundamentally different path. It released the full weights of its latest large language models, DeepSeek-V3 and DeepSeek-R1, and adopted the commercially friendly MIT license. As a result, all developers can freely access the models and even distill R1 to train their own systems. In comparison, OpenAI’s closed strategy appears increasingly conservative and vulnerable in the face of growing calls for algorithmic transparency.

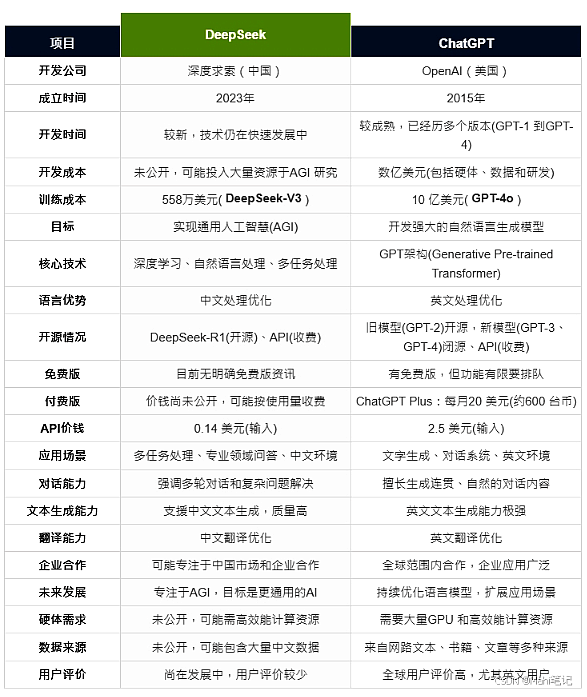

DeepSeek's official evaluations show that DeepSeek-R1 performs on par with OpenAI’s closed-source commercial model OpenAI-o1-1217 on tasks involving mathematics, programming, and multidisciplinary knowledge. On some challenging math reasoning benchmarks, it slightly surpasses that model. Training costs are also remarkably low. DeepSeek reported that the final training run of DeepSeek-V3, the base model from which R1 was developed, cost only about $5.6 million in GPU time. That figure excludes earlier research and ablation experiments. It is still a fraction of the hundreds of millions, or even billions, of dollars that major U.S. tech giants have invested in AI development. In terms of pricing, the DeepSeek-R1 API charges developers just 1 to 4 RMB per million input tokens, roughly 1/30th the price of OpenAI o1.

The image compares DeepSeek and ChatGPT in terms of company background, founding time, technical architecture, training cost, application scenarios, and inference capabilities. Data source: https://blog.csdn.net/Mahi6868/article/details/145706373, for reference only.

Compared with open-source projects, closed-source strategies are increasingly revealing their limitations. Many developers prefer open models, whose weights are publicly available, as a basis for customization and further development. More than a year after GPT-4’s release, little is publicly known about its internal mechanisms, and this has arguably held back downstream innovation. No clear generational upgrade had appeared, and growth in its API usage had begun to slow. DeepSeek’s transparency, by comparison, opens the door to progress in areas such as community auditing, dynamic content filtering, and traceable watermarking.

As the open-source wave continues to challenge closed systems, many organizations and communities are accelerating efforts to promote the decentralization of the global AI ecosystem. DeepSeek’s advocacy for distributed innovation aligns well with the current pursuit of technological and data sovereignty. As techniques such as model distillation and reinforcement learning become increasingly mature, the so-called “technological barriers” are gradually being dismantled by the collective power of the open-source community.
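Model distillation trains a smaller “student” model to imitate a larger “teacher.” The Python sketch below shows one classic formulation: the temperature-softened KL-divergence loss popularized by Hinton et al., applied to the output logits for a single example. It is a minimal, framework-free illustration built on our own assumptions. The function names, the default temperature of 2.0, and the toy logits are hypothetical, and the sketch does not necessarily reflect how DeepSeek distilled R1.

```python
import math
from typing import List, Sequence


def softmax(logits: Sequence[float], temperature: float = 1.0) -> List[float]:
    """Temperature-scaled softmax: a higher temperature yields a softer distribution."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(
    teacher_logits: Sequence[float],
    student_logits: Sequence[float],
    temperature: float = 2.0,  # hypothetical default; tuned in practice
) -> float:
    """KL(teacher || student) between softened distributions, scaled by T^2.

    The T^2 factor (as in Hinton et al.) keeps gradient magnitudes comparable
    across temperatures; this sketch only computes the loss value.
    """
    p = softmax(teacher_logits, temperature)  # soft targets from the teacher
    q = softmax(student_logits, temperature)  # student's softened prediction
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0.0)
    return temperature ** 2 * kl


if __name__ == "__main__":
    teacher = [4.0, 1.0, 0.5]        # hypothetical teacher logits over 3 classes
    close_student = [3.5, 1.2, 0.4]  # roughly agrees with the teacher
    far_student = [0.5, 1.0, 4.0]    # prefers a different class
    print(distillation_loss(teacher, close_student))
    print(distillation_loss(teacher, far_student))
```

A student whose logits track the teacher's receives a lower loss. In practice, this term is usually combined with an ordinary cross-entropy loss on the true labels and minimized by gradient descent over the student's parameters.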

Open-Source Coexistence: The Inevitable Path to an Advanced AI Civilization

As AI technology evolves into the “new electricity” of the digital age, the competition between open-source and closed-source models is no longer merely a debate over technical approaches—it is a fundamental decision shaping the trajectory of human intelligence and civilization.

History has repeatedly shown that closed systems eventually stagnate, while open ecosystems thrive by evolving together. Hugging Face’s model repository serves millions of downloads a day, and DeepSeek-R1 achieves performance comparable to closed-source models at roughly 1/30th the API price. Together, these developments paint a vision of a decentralized future. In that future, open-source licenses dismantle technological barriers, computing resources flow freely across distributed networks, and innovation shifts from corporate data centers to individual developers’ workstations.

The ultimate value of open source lies not only in lowering technical barriers but also in establishing a framework for a global intelligence community—one where algorithms undergo public scrutiny, innovation follows open standards, and AI benefits extend to broader populations. This may well be the ultimate answer to AI’s development: when AI becomes a shared asset of humanity, technology can truly serve everyone.

Join the AIHH Community!

Welcome to the AIHH (AI Helps Humans) Community! Join us in exploring the limitless possibilities of AI empowering everyday life!
